Polygon Introduces AI Chatbot Assistant, Polygon Copilot

Polygon, a well-known provider of Ethereum scaling solutions, has introduced an AI chatbot assistant, Polygon Copilot, on its platform.

What is Polygon Copilot?

Imagine a personal guide that can help you navigate the expansive ecosystem of decentralized applications (dApps) on Polygon.

“Where can I find an AI-powered guide to Polygon and web3?” … 📡

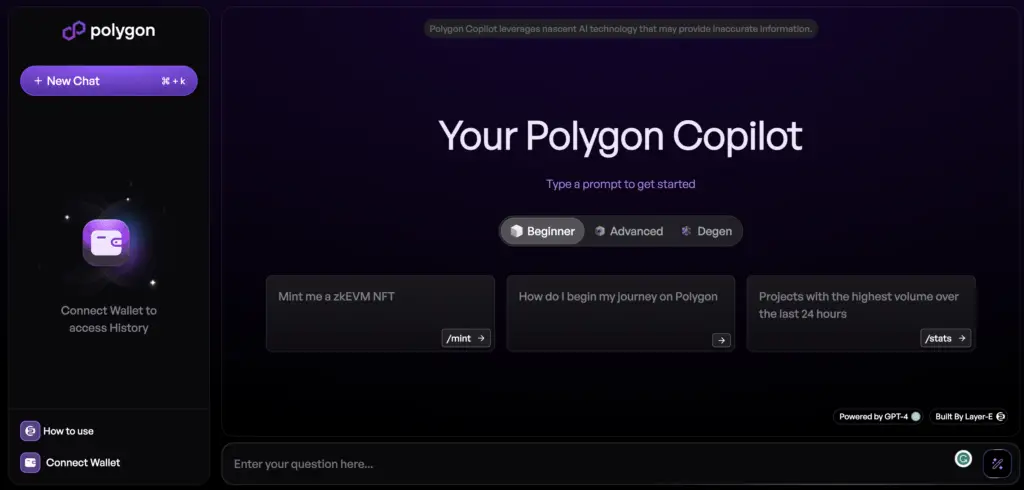

Introducing Polygon Copilot, powered by @LayerEhq and @OpenAI GPT-4. AKA, your friendly AI guide trained on all Polygon docs and the web3 universe.

More: https://t.co/GZ1qBx93nq pic.twitter.com/Nu7q13fcIJ

— Polygon (Labs) (@0xPolygonLabs) June 21, 2023

Polygon Copilot is just that! It’s an AI assistant that can answer your questions and provide information about the Polygon platform.

It comes with three different user levels: Beginner, Advanced, and Degen, each designed for users at different stages of familiarity with the ecosystem.

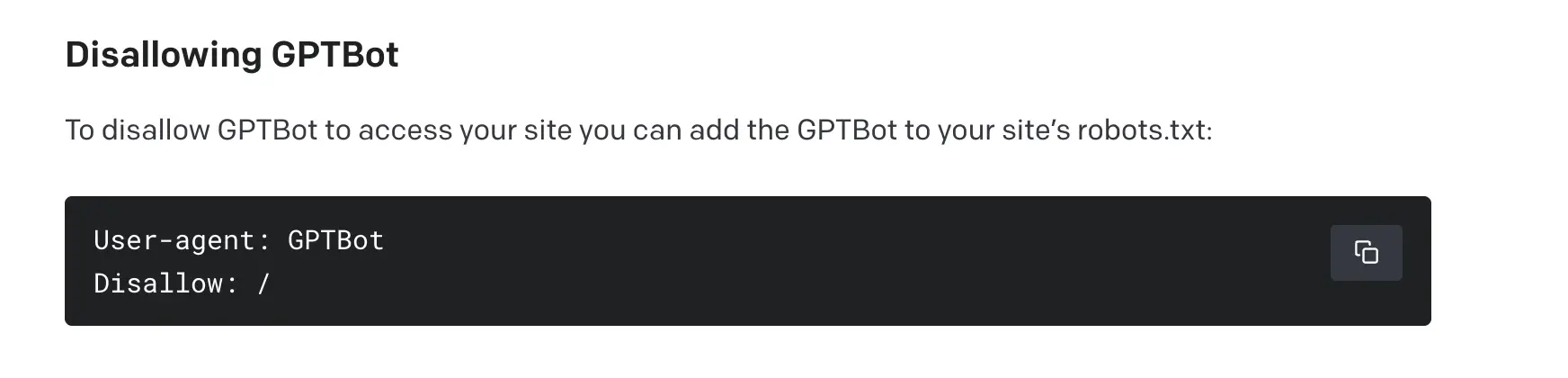

The assistant is built on OpenAI’s GPT-3.5 and GPT-4 models and is incorporated into the user interface of Polygon.

One of the main goals of the Copilot is to offer insights, analytics, and guidance based on the Polygon protocol documentation.

A standout feature of Polygon Copilot is its commitment to transparency. It discloses the sources of the information it gives, which enables users to verify the information and explore the topic further.
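To make the idea of source disclosure concrete, here is a minimal sketch of an answer that carries its sources alongside the text. It is not Polygon Copilot's actual data model or API: the `Source` and `Answer` types and the `render` function are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    """A document the answer was drawn from (hypothetical type)."""
    title: str
    url: str


@dataclass(frozen=True)
class Answer:
    """An answer plus the sources it cites (hypothetical type)."""
    text: str
    sources: tuple  # tuple of Source, in the order they were used


def render(answer: Answer) -> str:
    """Format the answer text followed by a numbered list of its sources."""
    lines = [answer.text]
    if answer.sources:
        lines.append("")
        lines.append("Sources:")
        for i, src in enumerate(answer.sources, start=1):
            lines.append(f"{i}. {src.title} <{src.url}>")
    return "\n".join(lines)
```

Keeping the sources attached to the answer, rather than embedded in the text, lets an interface show them as links that a user can follow to verify a claim.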

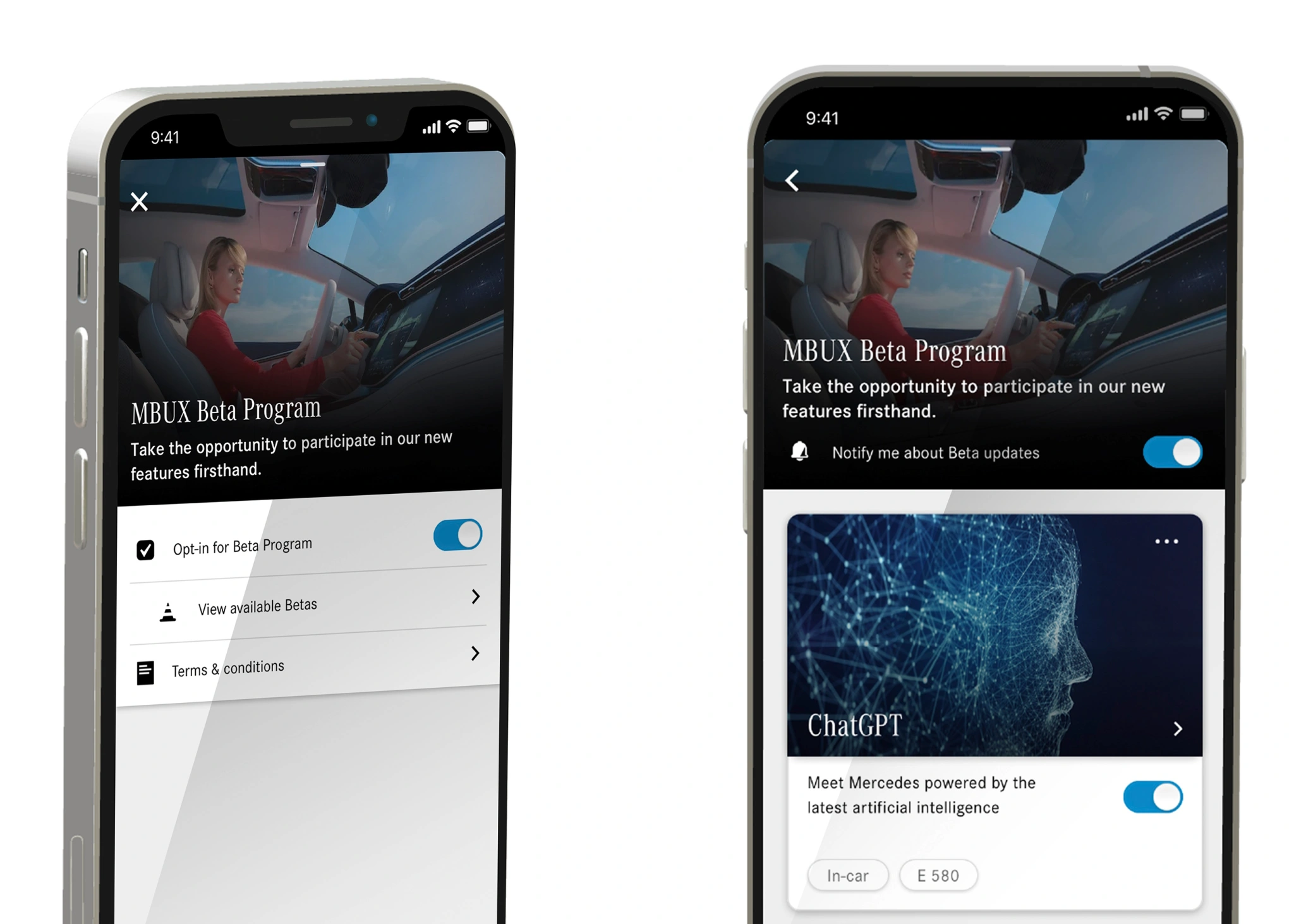

Polygon’s step towards integrating AI technology is part of a growing trend in the Web3 world.

Other companies, including Alchemy, Solana Labs, and Etherscan, are also harnessing the potential of AI.

Using Polygon Copilot

To get started with Polygon Copilot, users need to connect a wallet, which serves as their user account.

This account is given credits for asking questions, with new credits added every 24 hours.
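The credit scheme described above can be sketched roughly as follows. This is an illustration under stated assumptions, not Polygon's implementation: the article gives no allowance size, so `DAILY_CREDITS = 10` is made up, and the sketch assumes the balance is reset to a fixed allowance once 24 hours have passed since the last refill. `CreditLedger` and `try_spend` are hypothetical names.

```python
from dataclasses import dataclass, field

DAILY_CREDITS = 10               # assumed allowance; the article gives no number
REFILL_SECONDS = 24 * 60 * 60    # "new credits added every 24 hours"


@dataclass
class CreditLedger:
    """Tracks question credits per wallet address (hypothetical design)."""
    balances: dict = field(default_factory=dict)     # wallet -> credits left
    last_refill: dict = field(default_factory=dict)  # wallet -> time of last refill

    def _refill_if_due(self, wallet: str, now: float) -> None:
        # First visit, or at least 24 hours since the last refill:
        # reset the balance to the assumed daily allowance.
        last = self.last_refill.get(wallet)
        if last is None or now - last >= REFILL_SECONDS:
            self.balances[wallet] = DAILY_CREDITS
            self.last_refill[wallet] = now

    def try_spend(self, wallet: str, now: float) -> bool:
        """Spend one credit for a question; return False if none are left."""
        self._refill_if_due(wallet, now)
        if self.balances[wallet] <= 0:
            return False
        self.balances[wallet] -= 1
        return True
```

Under these assumptions, a caller would check `ledger.try_spend(wallet, time.time())` before forwarding a question to the model and decline the request when it returns `False`.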

And what sets Polygon Copilot apart? It’s not just any plain-speaking AI; it has a flair of its own. Ask it about the top NFT project on Polygon, and you’ll get a response full of personality.

However, it’s essential to remember that like all AI technology, Polygon Copilot isn’t perfect.

Users are cautioned that the AI may provide inaccurate information and are advised to take the chatbot’s answers with a grain of salt.

Polygon has set limits on the number of responses the chatbot can generate to prevent spamming and overload.

What’s Polygon All About?

Polygon positions itself as a scaling platform for Ethereum, addressing scalability issues within the Ethereum blockchain.

It aims to make applications built on the Ethereum blockchain faster and cheaper to use.

The introduction of the AI assistant is a leap forward for the platform. Whether you are a beginner looking for basic guidance or an advanced user trying to build complex products, Polygon Copilot is there to assist.

It’s also handy for analysts seeking accurate data about NFTs and dApps.

Web3 and the Promise of Data Ownership

Polygon’s use of AI reflects the broader evolution of the internet toward what is known as Web 3.0. This version of the internet promises users safety, transparency, and control over the data they create.

Web 3.0 operates on blockchain technology, a decentralized system intended to limit corporate access to private data.

Blockchains were born alongside Bitcoin, the first cryptocurrency, aiming to break free from corporations’ control over our data.

In the spirit of Web 3.0, platforms like Polygon allow users to control access to their data and attach value to it, enhancing data ownership.

As the tech world moves forward, innovations like Polygon Copilot highlight the growing intersection between artificial intelligence and blockchain technology, redefining user experience in the process.