Article sourced from the internet: Learn AI or Risk Losing Your Job, Warns New IBM Study

By Mukund Kapoor, a noted AI enthusiast and contributing author at GreatAiPrompts.Com, specializing in topics related to marketing, artificial intelligence, and innovative AI-driven technologies.

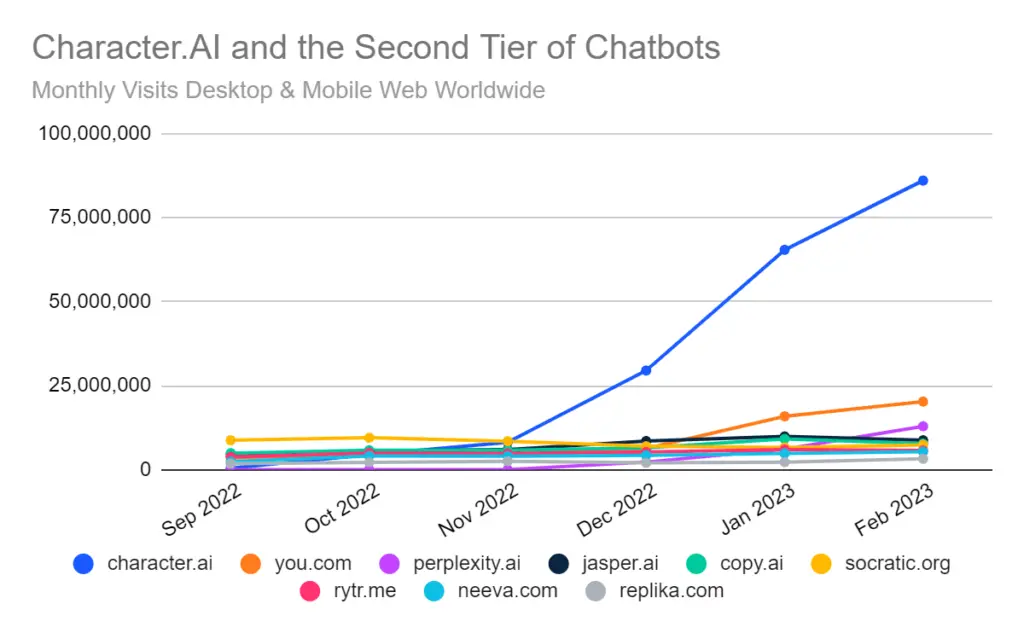

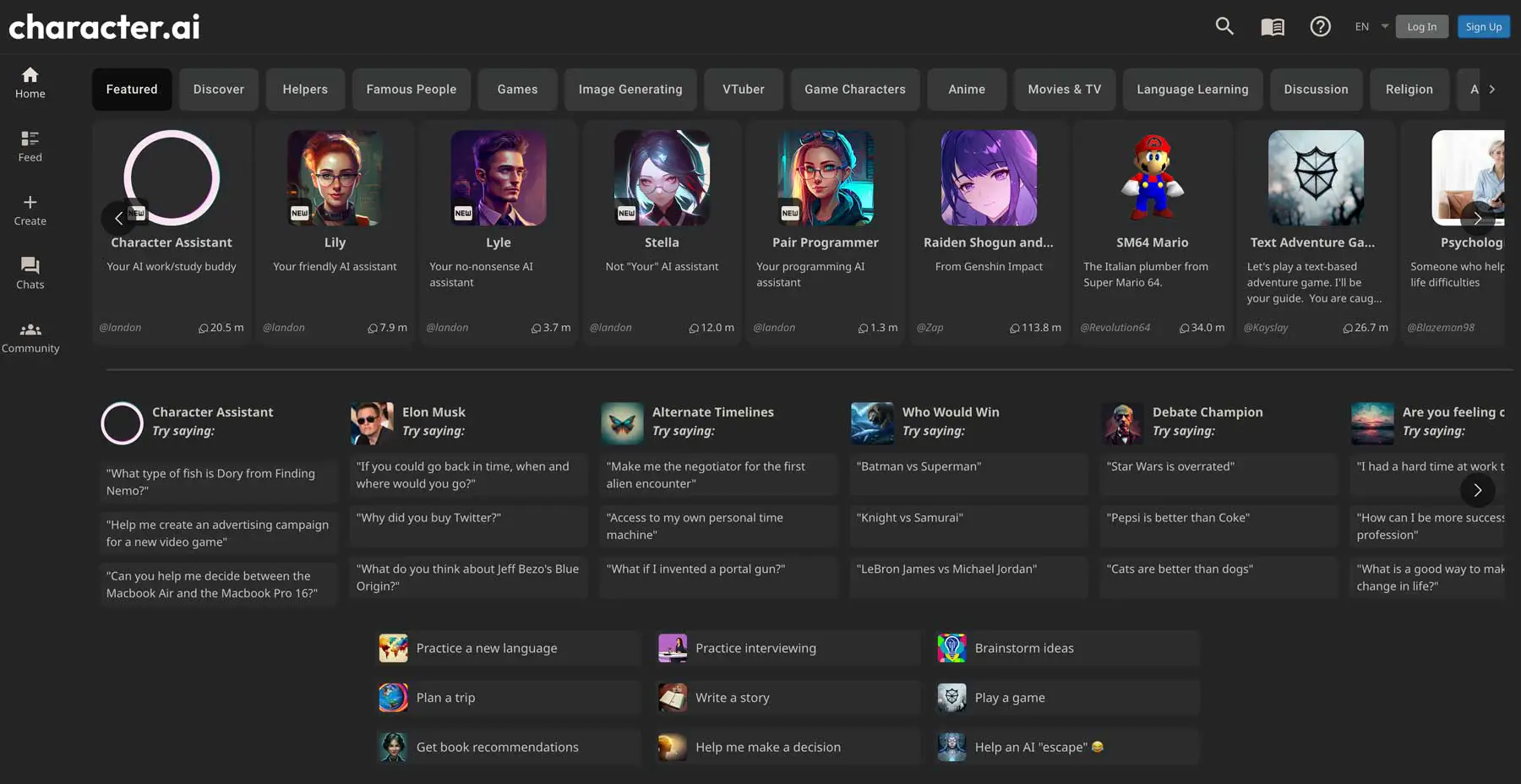

Lots of people talk about AI, or artificial intelligence. It helps with things like writing and coding. Some people worry it might take their jobs. But a study by IBM says something different.

IBM looked at data from big bosses and workers in many countries. The data says AI will change jobs but not in a bad way.

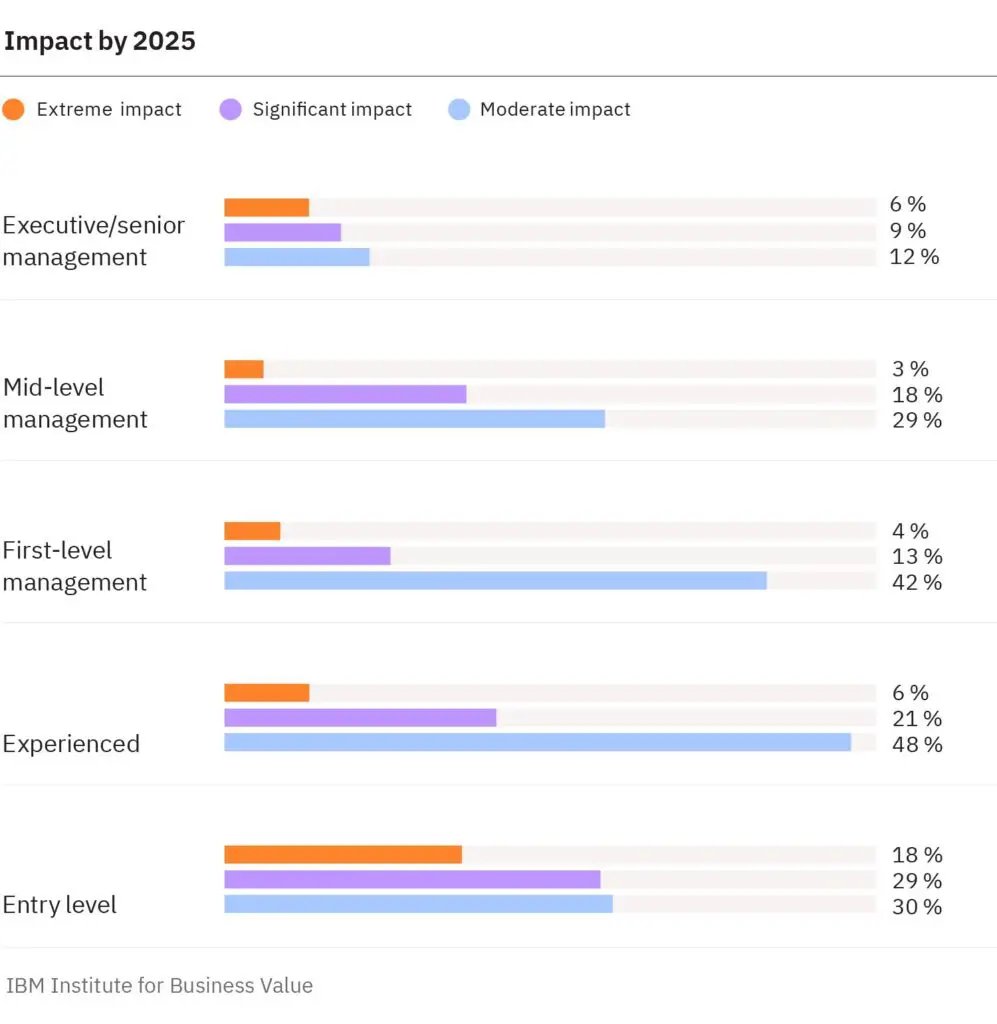

Big bosses think 40% of workers will need to learn new things in the next three years because of AI. That’s 1.4 billion people! But they think AI will help jobs, not take them away.

87% of these big bosses think AI will make jobs better. IBM also found that people who learn AI do better in their jobs.

Skills like science and math were very important in 2016. But now, tools like ChatGPT make those skills less important. People need to work well in teams and be ready to change. These are the most important skills in 2023, says IBM.

To help companies elevate their employees and gain a competitive edge, IBM suggests three key priorities:

“We’ve identified three key priorities that can help them elevate employees and gain a competitive edge:

– Transform traditional processes, job roles, and organizational structures to boost productivity and enable business and operating models that reflect the new nature of work.

– Build human-machine partnerships that enhance value creation, problem-solving, decision-making, and employee engagement.

– Invest in technology that lets people focus on less time-consuming, higher value tasks and drives revenue growth.”

IBM Study

IBM’s message is clear: AI will not take people’s jobs. People who learn AI will do better. If you don’t learn AI, someone else who knows AI might get your job. So, learning AI is a good idea.

