AI Chatbots Falling Short of EU Law Standards, a Stanford Study Reveals

A recent study conducted by researchers from Stanford University concludes that current large language models (LLMs) such as OpenAI’s GPT-4 and Google’s Bard are failing to meet the compliance standards set by the European Union (EU) Artificial Intelligence (AI) Act.

Understanding the EU AI Act

The EU AI Act, the first of its kind to regulate AI on a national and regional scale, was recently adopted by the European Parliament.

It not only governs AI within the EU, a region of 450 million people, but also sets a precedent for AI regulation globally.

However, according to the Stanford study, AI companies still have a considerable distance to cover before they reach compliance.

Compliance Analysis of AI Providers

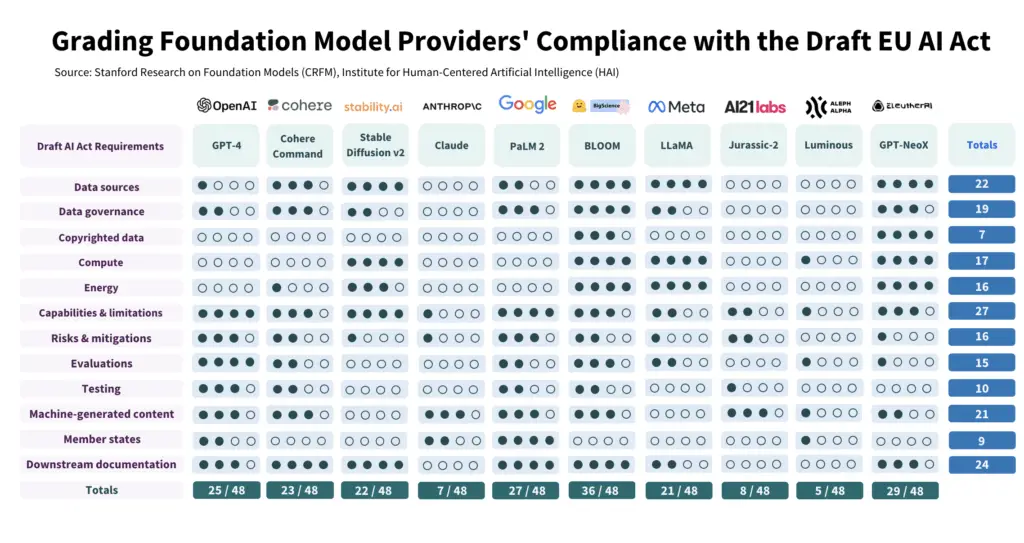

In their study, the researchers evaluated ten major model providers against the 12 requirements of the AI Act, scoring each provider on a 0 to 4 scale.

Stanford’s report says:

“We present the final scores in the above figure with the justification for every grade made available. Our results demonstrate a striking range in compliance across model providers: some providers score less than 25% (AI21 Labs, Aleph Alpha, Anthropic) and only one provider scores at least 75% (Hugging Face/BigScience) at present. Even for the highest-scoring providers, there is still significant margin for improvement. This confirms that the Act (if enacted, obeyed, and enforced) would yield significant change to the ecosystem, making substantial progress towards more transparency and accountability.”

The findings show wide variation in compliance: three providers score below 25%, and only Hugging Face/BigScience scores at least 75%.

Even the highest-scoring providers therefore have considerable scope for improvement.
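To make the percentages concrete, here is a minimal sketch of how a provider's score could be turned into a percentage. It assumes the percentage is simply the total points divided by the maximum of 48 (12 requirements at up to 4 points each); the article does not spell out the calculation. The function name and the sample scores are hypothetical and are not figures from the study.

```python
# Rubric constants taken from the article: 12 requirements, each scored 0 to 4.
NUM_REQUIREMENTS = 12
MAX_PER_REQUIREMENT = 4
MAX_TOTAL = NUM_REQUIREMENTS * MAX_PER_REQUIREMENT  # 48 points


def compliance_percentage(scores):
    """Return the share of available points earned, as a percentage.

    Assumption: the percentage is total points / maximum points.
    """
    if len(scores) != NUM_REQUIREMENTS:
        raise ValueError(f"expected {NUM_REQUIREMENTS} scores, got {len(scores)}")
    if any(not 0 <= s <= MAX_PER_REQUIREMENT for s in scores):
        raise ValueError(f"each score must be between 0 and {MAX_PER_REQUIREMENT}")
    return 100.0 * sum(scores) / MAX_TOTAL


# Hypothetical scores for an imaginary provider (NOT data from the study).
hypothetical_scores = [4, 3, 2, 0, 1, 4, 2, 3, 0, 1, 2, 2]
print(f"{compliance_percentage(hypothetical_scores):.0f}%")
```

Under this assumption, the 25% threshold corresponds to 12 of 48 points and the 75% threshold to 36 of 48.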

The Problem Areas

The researchers highlighted key areas of non-compliance, including inadequate disclosure of the copyright status of training data, energy consumption, emissions, and risk mitigation methodology.

They also observed a clear difference between open and closed model releases, with open releases providing better disclosure of resources but posing bigger challenges in controlling deployment.

The study concludes that all providers, regardless of their release strategy, have room for improvement.

A Reduction in Transparency

Recent major model releases have become less transparent.

OpenAI, for instance, chose not to disclose any data or compute details in its reports for GPT-4, citing the competitive landscape and safety implications.

Potential Impact of the EU AI Regulations

The Stanford researchers believe that the enforcement of the EU AI Act could significantly influence the AI industry.

The Act emphasises the need for transparency and accountability, encouraging large foundation model providers to adapt to new standards.

However, adapting business practices quickly enough to meet the regulatory requirements remains a major challenge for AI providers.

Despite this, the researchers suggest that with robust regulatory pressure, providers could achieve higher compliance scores through meaningful yet feasible changes.

The Future of AI Regulation

The study offers an insightful perspective on the future of AI regulation.

The researchers assert that if properly enforced, the AI Act could substantially impact the AI ecosystem, promoting transparency and accountability.

As we stand on the threshold of regulating this transformative technology, the study emphasises the importance of transparency as a fundamental requirement for responsible AI deployment.