Spotting AI-Written Text Gets Easier with New Research

Researchers have found a new method to determine whether a piece of text was penned by a human or an artificial intelligence (AI).

The technique relies on RoBERTa, a pretrained language model, to convert text into numerical representations whose structure can then be analyzed.

Finding the Differences

The study found that text produced by AI systems such as ChatGPT and Davinci shows different patterns from human-written text.

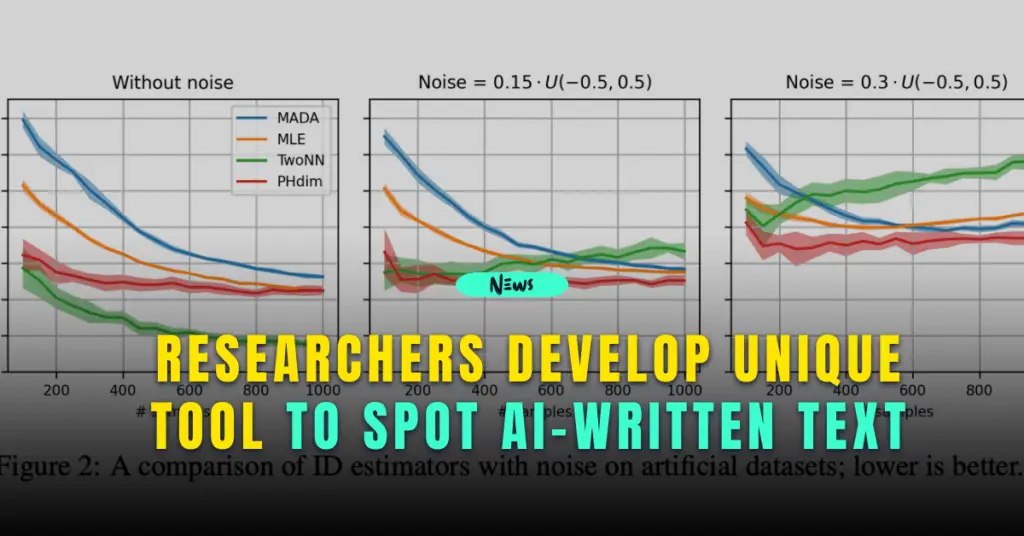

When the texts were represented as points in a multi-dimensional space, the points for AI-written text occupied a smaller region than the points for human-written text.
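To make the idea of a point cloud "occupying less space" concrete, here is a minimal sketch in Python. It is not the researchers' measure, which the article does not specify. It uses synthetic Gaussian points in place of real text representations, and the helper names `make_cloud` and `spread` are hypothetical. The spread is taken to be the mean squared distance of the points from their centroid, one simple stand-in for how much room a cloud takes up.

```python
import random


def make_cloud(n_points, n_dims, scale, seed):
    """Draw a synthetic point cloud from an isotropic Gaussian.

    Toy data only: these are not real text embeddings.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, scale) for _ in range(n_dims)]
            for _ in range(n_points)]


def spread(points):
    """Return the mean squared distance of the points from their centroid."""
    n = len(points)
    dims = len(points[0])
    centroid = [sum(p[d] for p in points) / n for d in range(dims)]
    total = 0.0
    for p in points:
        total += sum((p[d] - centroid[d]) ** 2 for d in range(dims))
    return total / n


# Assumption for illustration: the "human-like" cloud is drawn with a larger
# scale than the "AI-like" cloud, mimicking the pattern the article reports.
human_like = make_cloud(200, 8, scale=1.0, seed=0)
ai_like = make_cloud(200, 8, scale=0.5, seed=1)

print("human-like spread:", round(spread(human_like), 2))
print("AI-like spread:   ", round(spread(ai_like), 2))
```

With these settings the AI-like cloud comes out markedly tighter, which is the kind of gap a detector could exploit. Real representations would come from a language model rather than a random number generator.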

Using this key difference, researchers designed a tool that can resist common tactics employed to camouflage AI-written text.
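In its simplest form, a detector built on such a difference could be a threshold on a single per-text score. The sketch below is illustrative only and is not the researchers' tool. The scores are made-up numbers, the functions `fit_threshold` and `classify` are hypothetical, and the rule assumes that human-written text tends to score higher than AI-written text.

```python
def fit_threshold(human_scores, ai_scores):
    """Place the decision threshold midway between the two class means."""
    human_mean = sum(human_scores) / len(human_scores)
    ai_mean = sum(ai_scores) / len(ai_scores)
    return (human_mean + ai_mean) / 2.0


def classify(score, threshold):
    """Label a text 'ai' when its score falls below the threshold."""
    return "ai" if score < threshold else "human"


# Made-up scores for illustration only; these are not data from the study.
human_scores = [9.8, 10.4, 11.1, 10.0]
ai_scores = [7.9, 8.3, 8.0, 7.6]

threshold = fit_threshold(human_scores, ai_scores)
labels = [classify(s, threshold) for s in [7.7, 10.9]]
print(threshold, labels)
```

A single threshold is easy to inspect, but it only works if the two groups of scores stay well separated. Rewriting a text to shift its score is precisely the kind of tactic a detector has to withstand.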

The tool maintained high accuracy when tested across various types of text and AI models.

However, its accuracy dropped when it was tested against DIPPER, a paraphrasing model designed to help AI-generated text evade detection.

Despite this, it still performed better than other available detectors.

One of the more promising aspects of this tool is that it works with languages other than English. The research showed that while the pattern of text points varied across languages, AI-written text consistently occupied a smaller region than human-written text within each language.

Looking Ahead

While the researchers acknowledged that the tool faces difficulties when dealing with certain types of AI-generated text, they remain optimistic about potential enhancements in the future.

They also suggested exploring other models, similar to RoBERTa, for understanding the structure of text.

Earlier this year, OpenAI introduced a tool designed to distinguish between human and AI-generated text.

Although this tool is useful, it is not flawless and can misclassify text. The developers have made it freely available to the public in order to gather feedback and improve it.

These developments underscore the ongoing endeavors in the tech world to tackle the challenges posed by AI-generated content. Tools like these are expected to play a crucial role in battling misinformation campaigns and mitigating other harmful effects of AI-generated content.